Migrated from eDJGroupInc.com. Author: Greg Buckles. Published: 2014-08-18 20:00:00. Format, images and links may no longer function correctly.

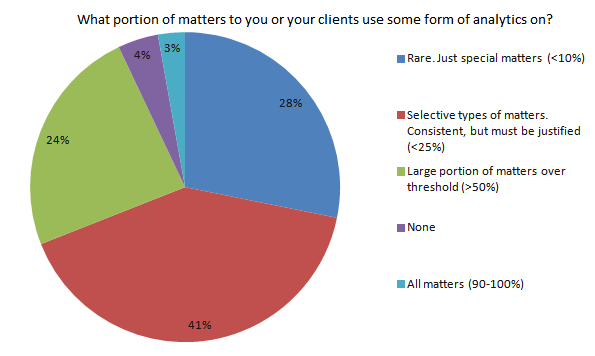

Based on my Analytic Adoption surveys and my 15+ extended interviews with cutting-edge litsupport, attorneys and providers actively using analytics, I have concluded that the vast majority of consumers and cases are not yet ready to use PC-TAR to make relevance decisions on productions. This goes against everything that we have seen at Legal Tech and every other marketing channel in our industry. I am not questioning the potential effectiveness of the technology or the expertise of the subject matter experts who seem to be required to manage these machine learning reviews, whether active, passive or just plain black box driven. Instead, I asked my survey respondents to tell me in what percentage of cases they actively used analytics. The initial numbers seemed quite encouraging (as seen below) until I started my live interviews. That was when I rediscovered the old axioms about Garbage In Garbage Out (GIGO) and Lies, Damn Lies and Statistics. Remember, this is my own data I am talking about.

The combined survey questions asked, “What portion of matters do you or your clients use some form of analytics on?” While you could interpret the combined responses to the right as validating the widespread use of PC-TAR, you and I would be WRONG. The question was too broad and that was my fault. The interviews made it clear that the market has a much broader definition of ‘Analytics’ than the marketers pushing PC-TAR on an over-saturated audience that is tired of being told that they cannot handle their own reviews. I sought out technology-agnostic service providers and in-house litsupport managers with deep eDiscovery experience who had all used PC-TAR on actual cases. Every one of them quickly differentiated between the use of analytics to prioritize or optimize collections prior to review and the actual use of any kind of machine learning PC-TAR technology. The use of ‘Accelerated Review’ during or just after processing to prioritize, cluster or otherwise optimize the collection is now well accepted and seems to be how my survey respondents were interpreting my question.

When you ask about the actual use of PC-TAR for real machine learning review, the numbers plummet. My best estimate is that only 5-7% of matters that reach the review stage (remember that most cases settle well before review) actually use some form of PC-TAR. And that level of adoption seems to be driven primarily by a small number of critical matters under tight deadlines or by a few very large defendants who have negotiated ‘all in one’ preferred provider agreements that wrap analytics into some kind of fixed fee/rate arrangement. Are there companies that use PC-TAR on EVERY review? Yes, I found these exceptions. But they are the earliest of the adopters and do not represent the broader market. My provider interviews all concurred that we are still in an educational sales cycle, and most of the providers have backed off from actively selling or promoting PC-TAR in the face of pressure from senior law firm partners and confused corporate customers. I will go into this and more in my 2014 Analytics research report as soon as I can find the bandwidth to complete it. I wanted to share my early conclusions while the interviews were still fresh.

Greg Buckles can be reached at Greg@eDJGroupInc.com for offline comment, questions or consulting. His active research topics include mobile device discovery, the discovery impact of the cloud, Microsoft’s 2013 eDiscovery Center and multi-matter discovery. Recent consulting engagements include managing preservation during enterprise migrations, legacy tape eliminations, retention enablement and many more.